PARTNR

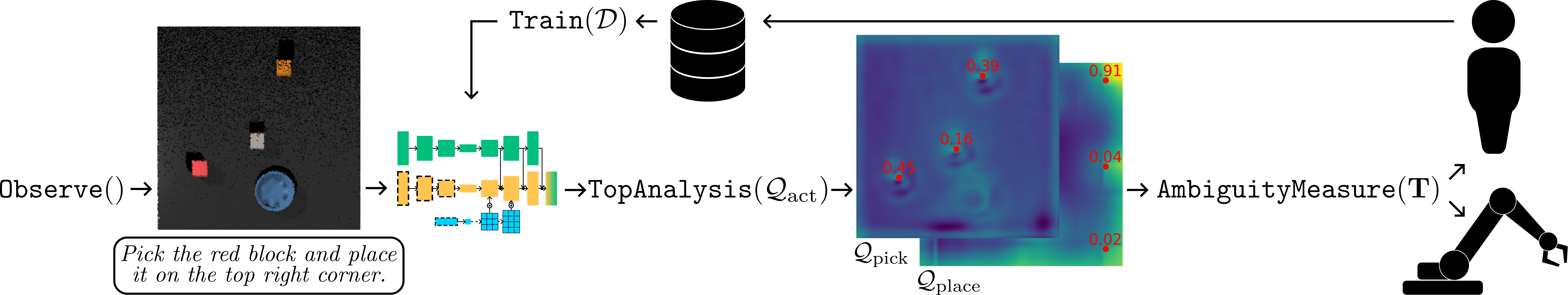

PARTNR is an interactive imitation learning algorithm that asks the human to take over control in case it considers the situation to be ambiguous. The situation is ambiguous when the learned policy does not provide a single dominant solution, i.e., there are multiple local maxima with close values in the action space. User demonstrations are aggregated to the dataset 𝒟 and used for subsequent training. The robot observes, at each execution step, a human-provided natural language command and the state of the environment (e.g., a top-view image of the table). Based on the observation, the policy provides the heatmap, representing the value of the action. The heatmap is then analyzed to detect multiple local maxima (in TopAnalysis). In this work, we rely on computational topology methods for finding local maxima, specifically we use a persistent homology method. Then, in AmbiguityMeasure, the obtained corresponding values of the local maxima T, are normalized using the softmax function and the maximum value is then used to decide if the situation is ambiguous. If AmbiguityMeasure(T) is smaller than a threshold value, the situation is ambiguous. In case the situation is ambiguous, the robot is not executing the policy but queries the human teacher. The threshold is updated continuously, at every step, by function UpdateThreshold, to satisfy a user defined sensitivity value. Whenever there is a teacher input, the data is aggregated and the policy is updated using the function Train.

Acknowledgements

This work was supported by the European Union’s H2020 project Open Deep Learning Toolkit for Robotics (OpenDR) under grant agreement #871449 and by the ERC Stg TERI, project reference #804907.

BibTeX

@inproceedings{Luijkx2022partnr,

author = {Luijkx, Jelle and Ajanovi{\'c}, Zlatan and Ferranti, Laura and Kober, Jens},

title = {{PARTNR: Pick and place Ambiguity Resolving by Trustworthy iNteractive leaRning}},

booktitle = {{5th NeurIPS Robot Learning Workshop: Trustworthy Robotics}},

year = {2022},

}